To take advantage of the parallel processing that Hadoop provides, we need to express our query as a MapReduce job. After some local, small-scale testing, we will be able to run it on a cluster of machines.

MapReduce

Map and Reduce

MapReduce works by breaking the processing into two phases: the map phase and the reduce phase.

Each phase has key-value pairs as input and output, the types of which may be chosen by the programmer.

The programmer also specifies two functions: the map function and the reduce function.

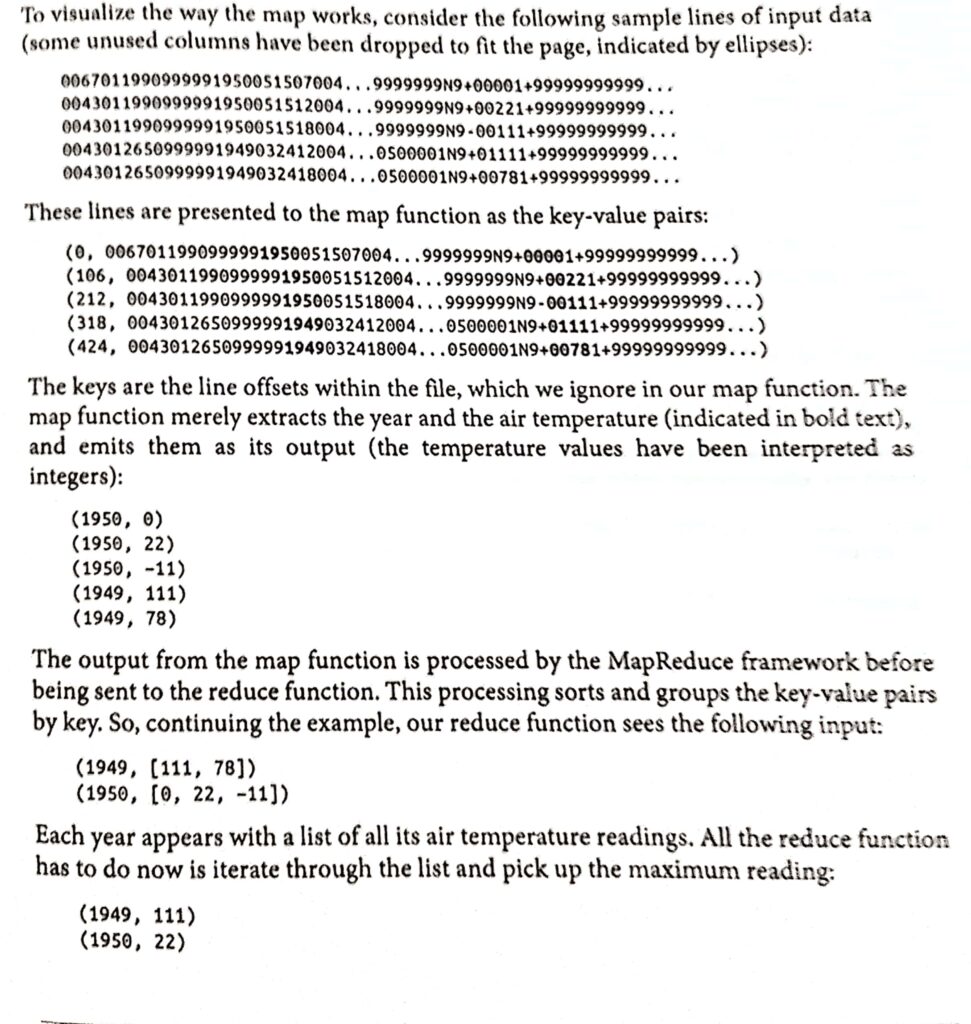
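The two-phase model can be sketched in a few lines of plain Python. This is only an in-memory illustration of the idea, not Hadoop's API: the driver `run_mapreduce` and the toy word-count functions are hypothetical names chosen for this example. It assumes that all values emitted under the same key are collected together before the reduce function sees them.

```python
from collections import defaultdict


def run_mapreduce(records, map_fn, reduce_fn):
    """Run map_fn over every input pair, group the output by key,
    then run reduce_fn once per key (hypothetical in-memory driver)."""
    grouped = defaultdict(list)
    # Map phase: each input (key, value) pair may emit any number of pairs.
    for key, value in records:
        for out_key, out_value in map_fn(key, value):
            grouped[out_key].append(out_value)
    # Reduce phase: each key is processed together with all of its values.
    results = []
    for out_key in sorted(grouped):
        results.extend(reduce_fn(out_key, grouped[out_key]))
    return results


# Toy job: the programmer picks the key and value types for each phase.
def word_map(_line_no, line):
    for word in line.split():
        yield word, 1


def word_reduce(word, counts):
    yield word, sum(counts)


lines = [(0, "to be or"), (1, "not to be")]
word_counts = run_mapreduce(lines, word_map, word_reduce)
print(word_counts)
```

Here the map input is (line number, text), the map output is (word, 1), and the reduce output is (word, total), which shows that the types may differ from phase to phase.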

The input to our map phase is the raw NCDC data. We choose a text input format that gives us each line in the dataset as a text value.
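As a rough sketch of what a line-oriented text input format hands to the map function, the following generator turns raw bytes into (key, value) records, using the byte offset of each line's start as the key and the line's text as the value. The function name `text_records` and the sample data are assumptions made for illustration; this is not Hadoop code.

```python
def text_records(data: bytes):
    """Yield (byte_offset, line_text) pairs, one per line of input."""
    offset = 0
    for raw_line in data.splitlines(keepends=True):
        # The value is the line without its terminator.
        yield offset, raw_line.rstrip(b"\r\n").decode("utf-8")
        # The next key is the byte position where the next line begins.
        offset += len(raw_line)


sample = b"first record\nsecond record\nthird\n"
records = list(text_records(sample))
for offset, line in records:
    print(offset, line)
```

A map function that has no use for the offset can simply leave that argument unused.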

The key is the offset of the beginning of the line from the beginning of the file, but as we have no need for this, we ignore it.

Our map function is simple: we pull out the year and the air temperature, because these are the only fields we are interested in.
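A map function of this kind might look like the sketch below. The column positions (year in characters 15 to 18, signed temperature in 87 to 91, quality code at 92), the missing-value sentinel `9999`, and the set of accepted quality codes are assumptions about the fixed-width NCDC record layout, not details given in this section. `make_line` is a hypothetical helper that builds synthetic records for the demonstration.

```python
MISSING = 9999            # assumed sentinel for "no reading"
GOOD_QUALITY = set("01459")  # assumed quality codes worth keeping


def map_temperature(_offset, line):
    """Emit (year, temperature) for a usable record, nothing otherwise."""
    year = line[15:19]
    temperature = int(line[87:92])   # int() accepts a leading '+' or '-'
    quality = line[92]
    if temperature != MISSING and quality in GOOD_QUALITY:
        yield year, temperature


def make_line(year, temperature, quality):
    """Build a synthetic 93-character record (illustration only)."""
    return "0" * 15 + year + "0" * 68 + f"{temperature:+05d}" + quality


good = list(map_temperature(0, make_line("1950", 22, "1")))
cold = list(map_temperature(93, make_line("1949", -11, "1")))
missing = list(map_temperature(186, make_line("1950", 9999, "1")))
print(good, cold, missing)
```

Because the function yields nothing for an unusable record, dropping a record needs no special handling downstream.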

In this case, the map function is just a data preparation phase, setting up the data in such a way that the reduce function can do its work on it: finding the maximum temperature for each year.
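The reduce side can be sketched in the same spirit. The example assumes the map output is sorted and grouped by key before it reaches the reduce function; `shuffle_and_reduce` is a hypothetical stand-in for that step, and the (year, temperature) pairs are made-up illustrative values.

```python
from itertools import groupby
from operator import itemgetter


def reduce_max_temperature(year, temperatures):
    """Emit the highest temperature seen for one year."""
    yield year, max(temperatures)


def shuffle_and_reduce(pairs, reduce_fn):
    """Sort pairs by key, group them, and call reduce_fn once per key."""
    results = []
    ordered = sorted(pairs, key=itemgetter(0))
    for key, group in groupby(ordered, key=itemgetter(0)):
        results.extend(reduce_fn(key, [value for _, value in group]))
    return results


# Illustrative map output: (year, temperature) pairs.
map_output = [("1950", 0), ("1950", 22), ("1950", -11),
              ("1949", 111), ("1949", 78)]
max_by_year = shuffle_and_reduce(map_output, reduce_max_temperature)
print(max_by_year)
```

Since the map function has already reduced each record to a (year, temperature) pair, the reduce function only has to take a maximum over the values for each key.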

The map function is also a good place to drop bad records: here we filter out temperatures that are missing, suspect, or erroneous. To visualize the way the map works,