MapReduce is a programming model for data processing. The model is simple, yet not too simple to express useful programs in.

Hadoop can run MapReduce programs written in various languages; we look at the same program expressed in Java, Ruby, and Python.

Most importantly, MapReduce programs are inherently parallel, thus putting very large-scale data analysis into the hands of anyone with enough machines at their disposal.
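To make the model concrete, here is a minimal single-process sketch in plain Java. It is not Hadoop's API. The names `MiniMapReduce`, `run`, `Pair`, and `maxTemperatureByYear` are hypothetical, and the "year temperature" input records are made up. The sketch shows the three stages a MapReduce framework coordinates: a map function applied to each record on its own, a grouping of intermediate values by key, and a reduce function applied to each group on its own.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.BiFunction;
import java.util.function.Function;

// Single-process illustration of the MapReduce model (hypothetical; NOT the Hadoop API).
public class MiniMapReduce {

    // Hypothetical key-value pair emitted by the map function.
    public record Pair<K, V>(K key, V value) {}

    // Applies the mapper to every input, groups intermediate values by key
    // (the "shuffle"), then calls the reducer once per key.
    public static <I, K extends Comparable<K>, V, R> Map<K, R> run(
            List<I> inputs,
            Function<I, List<Pair<K, V>>> mapper,
            BiFunction<K, List<V>, R> reducer) {
        // Map phase: each call sees only its own record.
        Map<K, List<V>> grouped = new TreeMap<>();
        for (I input : inputs) {
            for (Pair<K, V> pair : mapper.apply(input)) {
                grouped.computeIfAbsent(pair.key(), k -> new ArrayList<>()).add(pair.value());
            }
        }
        // Reduce phase: each key's group is processed on its own.
        Map<K, R> results = new TreeMap<>();
        for (Map.Entry<K, List<V>> entry : grouped.entrySet()) {
            results.put(entry.getKey(), reducer.apply(entry.getKey(), entry.getValue()));
        }
        return results;
    }

    // Example job over hypothetical "year temperature" lines: maximum per year.
    public static Map<String, Integer> maxTemperatureByYear(List<String> lines) {
        return MiniMapReduce.<String, String, Integer, Integer>run(
                lines,
                // map: "year temperature" -> (year, temperature)
                line -> {
                    String[] parts = line.split(" ");
                    return List.of(new Pair<>(parts[0], Integer.parseInt(parts[1])));
                },
                // reduce: all temperatures for one year -> their maximum
                (year, temps) -> temps.stream().mapToInt(Integer::intValue).max().orElseThrow());
    }

    public static void main(String[] args) {
        List<String> lines = List.of("1949 111", "1949 78", "1950 0", "1950 22", "1950 -11");
        System.out.println(maxTemperatureByYear(lines)); // prints {1949=111, 1950=22}
    }
}
```

No map call shares state with any other map call, and the same holds for reduce calls. A framework such as Hadoop is therefore free to spread them across machines. In this sketch they simply run in a loop.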

MapReduce comes into its own for large datasets, so let’s start by looking at one.

A Weather Dataset

For our example, we will write a program that mines weather data.

Weather sensors collect data every hour at many locations across the globe, producing a large volume of log data. This data is a good candidate for analysis with MapReduce because we want to process all of it, and it is semi-structured and record-oriented.

Data Format

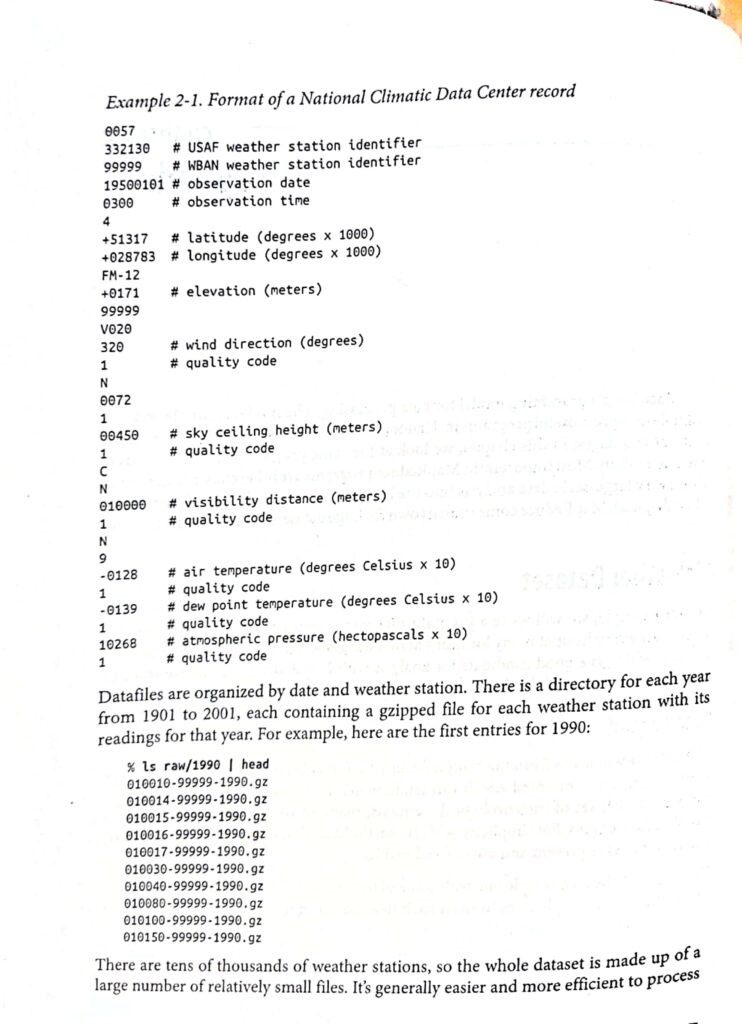

The data we will use is from the National Climatic Data Center, or NCDC. The data is stored using a line-oriented ASCII format, in which each line is a record.

The format supports a rich set of meteorological elements, many of which are optional or have variable data lengths.

For simplicity, we focus on the basic elements, such as temperature, which are always present and are of fixed width.
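Because the basic elements sit at fixed positions in each line, extracting one is just a substring at known offsets. The sketch below illustrates this. The column offsets, the `9999` missing-value sentinel, and the tenths-of-a-degree unit are assumptions for illustration only and should be checked against the NCDC format documentation. The class, its methods, and the synthetic record builder are hypothetical, and the synthetic record is not real NCDC data.

```java
import java.util.OptionalInt;

// Sketch: reading fixed-width fields from one line-oriented record.
// All offsets and the MISSING sentinel are ASSUMED, not taken from the NCDC spec.
public class FixedWidthRecord {

    // Hypothetical 0-based [start, end) column offsets.
    public static final int YEAR_START = 15, YEAR_END = 19;
    public static final int TEMP_START = 87, TEMP_END = 92;        // signed value (assumed tenths of a degree C)
    public static final int QUALITY_START = 92, QUALITY_END = 93;  // one-character quality code
    public static final int MISSING = 9999;                        // assumed "no reading" sentinel

    public static String year(String line) {
        return line.substring(YEAR_START, YEAR_END);
    }

    // Returns the temperature, or empty if the field holds the missing sentinel.
    public static OptionalInt airTemperature(String line) {
        // Integer.parseInt accepts a leading '+' or '-' (Java 7 and later).
        int value = Integer.parseInt(line.substring(TEMP_START, TEMP_END));
        return value == MISSING ? OptionalInt.empty() : OptionalInt.of(value);
    }

    public static String quality(String line) {
        return line.substring(QUALITY_START, QUALITY_END);
    }

    // Builds a synthetic record with the given fields at the assumed offsets;
    // every other column is filled with '0'. For demonstration only.
    public static String syntheticRecord(String year, String temp, String quality) {
        StringBuilder sb = new StringBuilder("0".repeat(QUALITY_END));
        sb.replace(YEAR_START, YEAR_END, year);
        sb.replace(TEMP_START, TEMP_END, temp);
        sb.replace(QUALITY_START, QUALITY_END, quality);
        return sb.toString();
    }

    public static void main(String[] args) {
        String line = syntheticRecord("1950", "-0011", "1");
        System.out.println(year(line) + " " + airTemperature(line) + " " + quality(line));
    }
}
```

Fixed-width fields make parsing cheap and stateless: each line can be decoded without looking at any other line. That property suits record-at-a-time processing.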

Because it is generally easier and more efficient to process a smaller number of relatively large files, the data was preprocessed so that each year’s readings were concatenated into a single file.
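A concatenation step like the one described can be sketched with `java.nio.file`. The file names and layout here are hypothetical, and the sketch assumes every input file ends with a newline. Without that trailing newline, the last record of one file would run into the first record of the next.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;

// Sketch of the preprocessing step: many small per-station files -> one file per year.
// File names and directory layout are hypothetical.
public class ConcatenateYear {

    // Streams each input file, in order, into a single output file
    // (created or truncated first), without loading whole files into memory.
    public static void concatenate(List<Path> inputs, Path output) throws IOException {
        try (OutputStream out = Files.newOutputStream(output)) {
            for (Path input : inputs) {
                Files.copy(input, out);
            }
        }
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("ncdc");
        Path a = Files.writeString(dir.resolve("station-a-1950.txt"), "record a1\nrecord a2\n");
        Path b = Files.writeString(dir.resolve("station-b-1950.txt"), "record b1\n");
        Path out = dir.resolve("1950.txt");
        concatenate(List.of(a, b), out);
        System.out.print(Files.readString(out));
    }
}
```

Since the format is line-oriented, simple byte-level concatenation preserves every record unchanged. The result is just fewer, larger files.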